Cool! 15+ Truths You Need to Know About Red Bull Racing F1 Wallpaper 4K 2020! Looking for the best

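The traditional F-measure or balanced F-score ($F_1$ score) is the harmonic mean of precision and recall:

$$F_1 = \frac{2}{\mathrm{recall}^{-1} + \mathrm{precision}^{-1}} = 2 \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\mathrm{precision} + \mathrm{recall}} = \frac{2\,TP}{2\,TP + FP + FN}$$

$F_\beta$ score. A more general F score, $F_\beta$, that uses a positive real factor $\beta$, where $\beta$ is chosen such that recall is considered $\beta$ times as important as precision, is:

$$F_\beta = (1 + \beta^2) \cdot \frac{\mathrm{precision} \cdot \mathrm{recall}}{\beta^2 \cdot \mathrm{precision} + \mathrm{recall}}$$

In terms of Type I and type II errors this becomes:

$$F_\beta = \frac{(1 + \beta^2) \cdot TP}{(1 + \beta^2) \cdot TP + \beta^2 \cdot FN + FP}$$

Two commonly used values for $\beta$ are 2, which.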
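As a minimal sketch of the F-beta definition above, the two forms (from counts and from precision/recall) can be written and compared in Python. The function names and the example counts (6 true positives, 1 false positive, 2 false negatives) are assumptions chosen for illustration, and returning 0.0 for an empty denominator is one possible convention, not a standard.

```python
def f_beta(tp: int, fp: int, fn: int, beta: float = 1.0) -> float:
    """F-beta from confusion-matrix counts; beta=1 gives the F1 score."""
    b2 = beta ** 2
    denom = (1 + b2) * tp + b2 * fn + fp
    # Assumed convention: report 0.0 when the denominator is zero.
    return (1 + b2) * tp / denom if denom else 0.0


def f_beta_from_pr(precision: float, recall: float, beta: float = 1.0) -> float:
    """F-beta from precision and recall; should agree with f_beta()."""
    b2 = beta ** 2
    denom = b2 * precision + recall
    return (1 + b2) * precision * recall / denom if denom else 0.0


# Hypothetical counts, for illustration only.
tp, fp, fn = 6, 1, 2
precision = tp / (tp + fp)  # 6/7
recall = tp / (tp + fn)     # 6/8

print(f"F1 = {f_beta(tp, fp, fn):.3f}")            # F1 = 0.800
print(f"F2 = {f_beta(tp, fp, fn, beta=2.0):.3f}")  # F2 = 0.769
print(f"F2 from P/R = {f_beta_from_pr(precision, recall, beta=2.0):.3f}")
```

With beta = 2 the score drops below F1 here because recall (0.75) is lower than precision (about 0.857) and is weighted more heavily.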

Look at the following figure! F1 A1 F2 A2 If the cross-sectional area...

F1 score is even more unreliable in such cases, and here would yield over 97.4%, whereas informedness removes such bias and yields 0 as the probability of an informed decision for any form of guessing (here always guessing cancer).
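The contrast between F1 and informedness can be sketched with a small calculation. The class sizes below (95 cancer cases, 5 non-cancer cases, and a classifier that always predicts cancer) are an assumption chosen because they reproduce the "over 97.4%" figure; the snippet itself does not state them. Informedness is computed as true positive rate plus true negative rate minus 1.

```python
def f1_and_informedness(tp: int, fp: int, fn: int, tn: int) -> tuple[float, float]:
    """Return (F1, informedness) for a binary confusion matrix."""
    f1 = 2 * tp / (2 * tp + fp + fn)
    tpr = tp / (tp + fn)  # recall / sensitivity
    tnr = tn / (tn + fp)  # specificity
    return f1, tpr + tnr - 1


# Assumed scenario: 95 positives, 5 negatives, classifier always says "positive".
f1, informedness = f1_and_informedness(tp=95, fp=5, fn=0, tn=0)
print(f"F1 = {f1:.3f}, informedness = {informedness:.3f}")
# F1 = 0.974, informedness = 0.000
```

Under these assumed counts the always-positive guesser gets a high F1 purely from class prevalence, while informedness reports no skill.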

Dissolution Test Method and Calculation

The F1 score is the harmonic mean of precision and recall, and it is often a more informative measure than accuracy when classes are imbalanced. In the pregnancy example, F1 score = 2 × (0.857 × 0.75) / (0.857 + 0.75) ≈ 0.80.

Renault changes its F1 team name to "Renault F1 Team" from 2019 【 F1Gate

This short video explains how to build a confusion matrix table and how to calculate accuracy, precision, recall, and F1 score. An easy explanation.

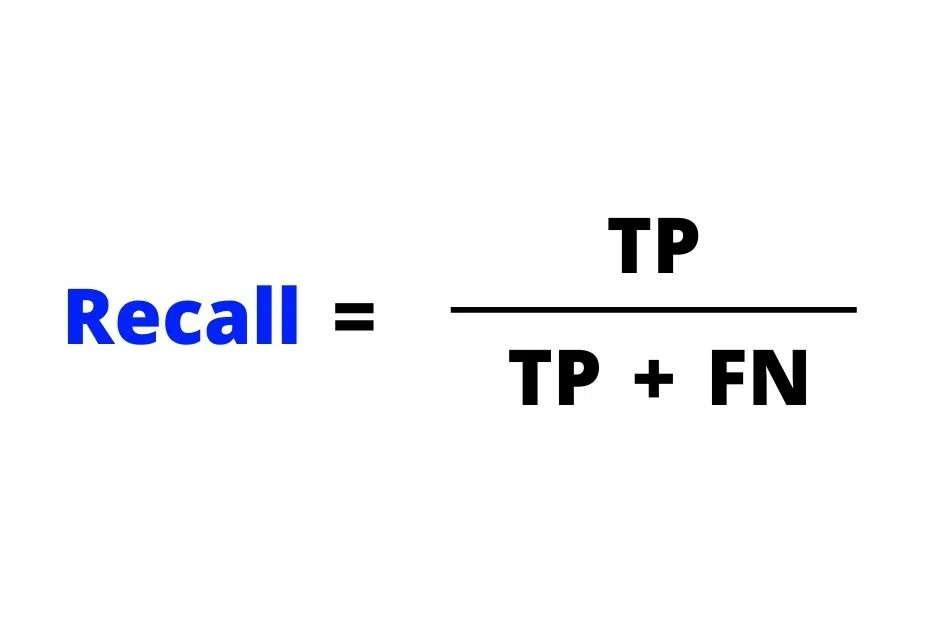

Formulas for Calculating Accuracy, Precision, Recall, and F-Measure

Accuracy is a very good reference for algorithm performance IF the dataset has nearly equal numbers of false negatives and false positives (symmetric). If the two numbers are not close, it is better to use the F1 score as the reference.

Precision, Recall and F1 Score — Pavan Mirla

And although this is true, it only matters up to a point. This is why I will discuss several other performance metrics, such as the confusion matrix, precision-recall, and F1 score, that you should consider using alongside accuracy when evaluating a machine learning model. Let's get started.

During this webinar, we will put you in the seat of an aerodynamics engineer and investigate the

An excellent model has an AUC near 1.0, which means it separates the classes well. For your model, the AUC is the combined area of the blue, green and purple rectangles, so the AUC = 0..

When to use Precision, Recall, or F1 score? Dragon Forest

Yes, precision, recall, and F1 score. The reason I discuss only these three is that, for me, they show how our model makes decisions in the real world, from business matters to medical diagnosis. However, we cannot talk about precision, recall, and F1 score.

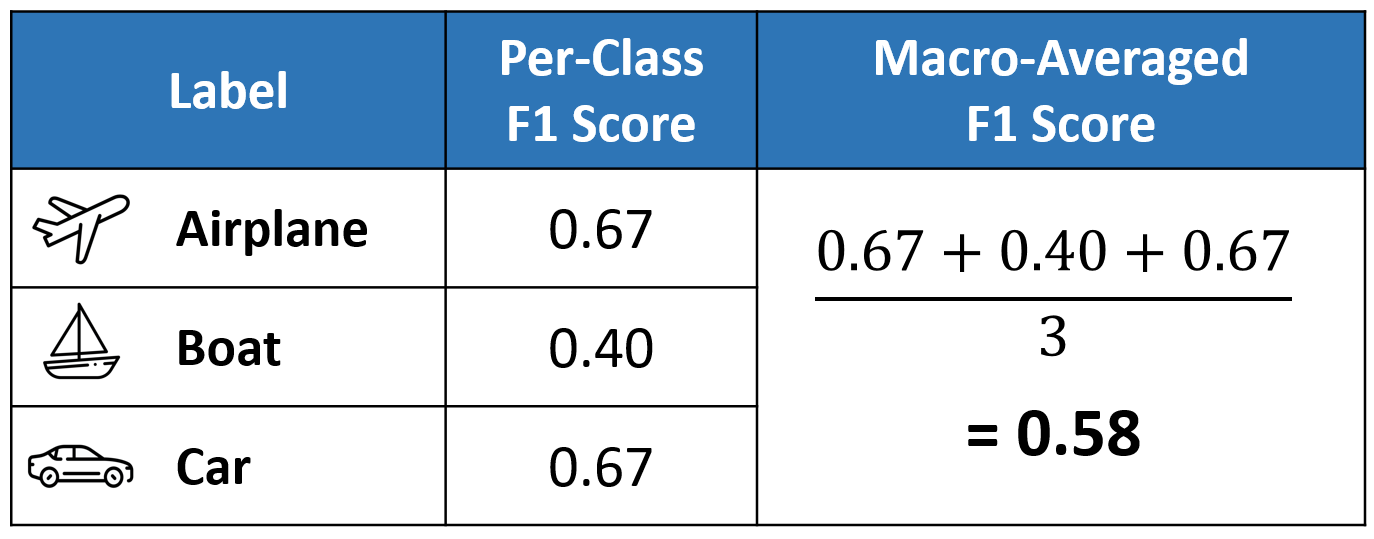

Micro, Macro & Weighted Averages of F1 Score, Clearly Explained by Leung Towards

In short, one strategy is to split the multiclass problem into a set of binary classification problems, with a "one vs. all others" classification for each class. Then for each binary problem you can calculate F1, precision and recall, and if you want you can average (uniformly or weighted) the scores of each class to get one F1 score which will.

What is Formula 1's 'DRS' system and how does it work?

The F1 score can be interpreted as the harmonic mean of precision and recall; it reaches its best value at 1 and its worst at 0. Precision and recall contribute equally to the F1 score. The formula for the F1 score is: $F_1 = \frac{2 \cdot TP}{2 \cdot TP + FP + FN}$. Where TP is the number of true positives, FN is the.

calculating F1 score in Excel Stack Overflow

This is called the macro-averaged F1-score, or the macro-F1 for short, and is computed as a simple arithmetic mean of our per-class F1-scores: Macro-F1 = (42.1% + 30.8% + 66.7%) / 3 = 46.5% In a similar way, we can also compute the macro-averaged precision and the macro-averaged recall:
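The macro-F1 arithmetic quoted above can be reproduced in a few lines. The per-class F1 values come from the text; the per-class supports used for the weighted variant are hypothetical and included only to show how a weighted average would differ from the simple mean.

```python
def macro_average(scores: list[float]) -> float:
    """Simple arithmetic mean of per-class scores."""
    return sum(scores) / len(scores)


def weighted_average(scores: list[float], supports: list[int]) -> float:
    """Mean of per-class scores weighted by the number of true samples per class."""
    total = sum(supports)
    return sum(s * n for s, n in zip(scores, supports)) / total


per_class_f1 = [0.421, 0.308, 0.667]  # values quoted in the text
supports = [3, 2, 5]                  # hypothetical class sizes, illustration only

print(f"Macro-F1 = {macro_average(per_class_f1):.1%}")  # Macro-F1 = 46.5%
print(f"Weighted-F1 = {weighted_average(per_class_f1, supports):.1%}")
```

The same two helpers apply unchanged to per-class precision or recall values.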

F1 Kite OLL9 FORMULA for BEGINNERS (3x3 Rubik's Cube) YouTube

If you spell out the definitions of precision (aka positive predictive value, PPV) and recall (aka sensitivity), you see that they relate to one class independent of any other classes: Recall or sensitivity is the proportion of cases correctly identified as belonging to class c among all cases that truly belong to class c. (Given we have a case truly belonging to "c", what is the probability.

Ferrari F1 wallpaper, Formula 1, ferrari formula 1, vehicle, race car 3840x2160 CMBN3

F1 score: The F1 score is the harmonic mean of precision and recall. Values range from 0 to 1, where 1 means highest accuracy. F1 score = (Precision × Recall)/[(Precision + Recall)/2]. Precision-recall curve: This is a plot of precision (y-axis) and recall (x-axis), and it serves as an evaluation of the performance of an object detection.

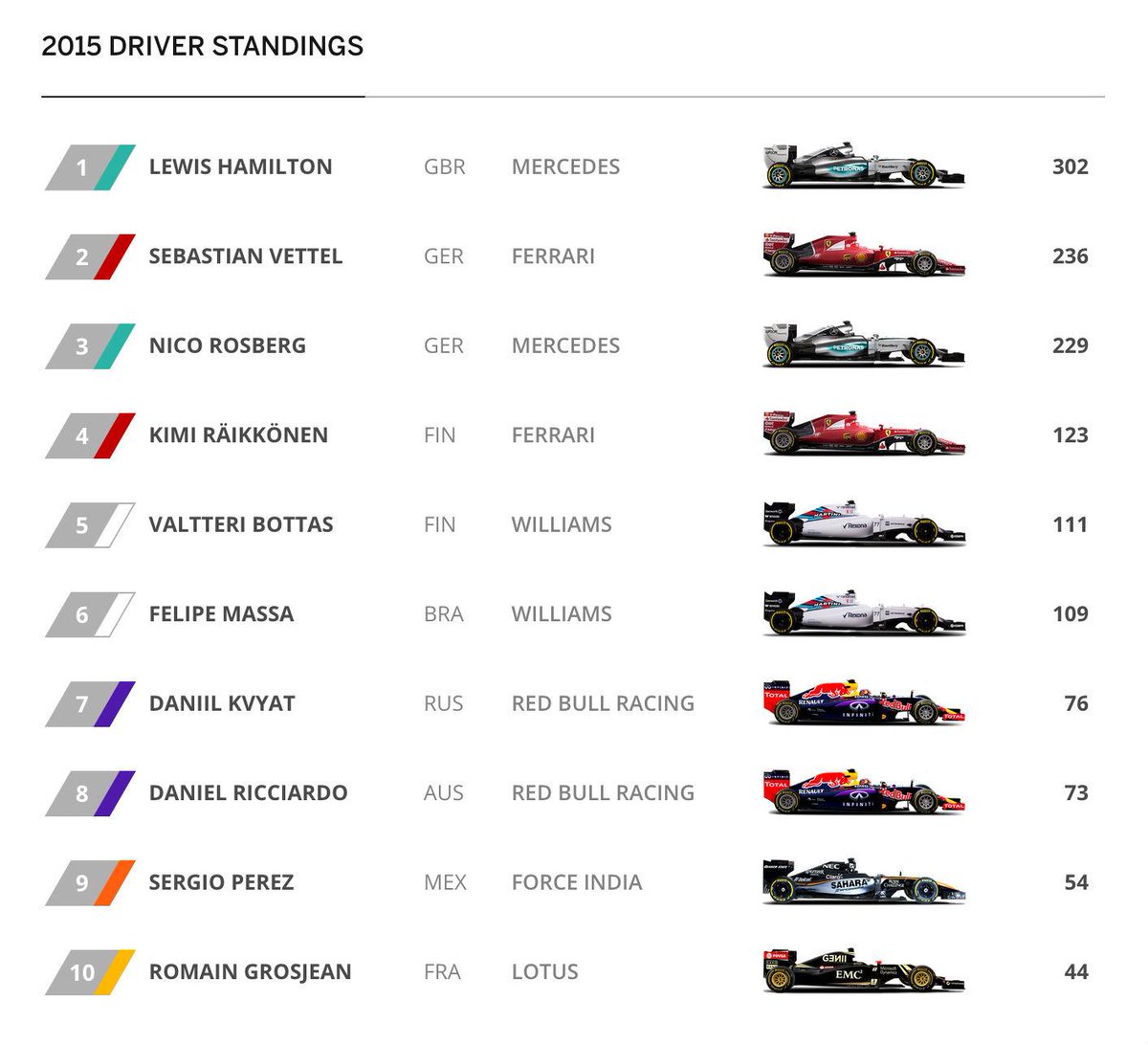

F1 Standings Today Pin on 8 • F1 Standings / View the latest results for formula 1 2021.

When working on an imbalanced dataset that demands attention to the negatives, balanced accuracy does better than F1. Where positives are as important as negatives, balanced accuracy is also the better metric. F1 is a great scoring metric for imbalanced data when more attention is needed on the positives.
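One way to see why balanced accuracy treats negatives and positives symmetrically while F1 does not is to swap which class is called "positive" and recompute both. The sketch below does this with hypothetical counts (90 samples of one class, 10 of the other); the function names are assumptions for illustration.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """F1 from counts; true negatives do not appear in the formula."""
    return 2 * tp / (2 * tp + fp + fn)


def balanced_accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Mean of the recall on the positive class and the recall on the negative class."""
    return (tp / (tp + fn) + tn / (tn + fp)) / 2


# Hypothetical counts: majority class labeled positive.
tp, fp, fn, tn = 90, 9, 0, 1
print(f"F1 = {f1_score(tp, fp, fn):.3f}, BA = {balanced_accuracy(tp, fp, fn, tn):.3f}")

# Same predictions, but the minority class is now labeled positive:
# TP and TN trade places, and so do FP and FN.
tp_s, fp_s, fn_s, tn_s = tn, fn, fp, tp
print(f"F1 = {f1_score(tp_s, fp_s, fn_s):.3f}, BA = {balanced_accuracy(tp_s, fp_s, fn_s, tn_s):.3f}")
```

With these assumed counts, balanced accuracy is 0.55 under both labelings, while F1 moves from roughly 0.95 to roughly 0.18, because F1 ignores true negatives and therefore depends on which class is treated as positive.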

17 Gambar Vektor Resultan Gaya Basgalanos

This is sometimes called the F-Score or the F1-Score and might be the most common metric used on imbalanced classification problems. "... the F1-measure, which weights precision and recall equally, is the variant most often used when learning from imbalanced data." (Page 27, Imbalanced Learning: Foundations, Algorithms, and Applications, 2013.)

What is Formula 1? — Guide to Formula 1

The above table sets us up nicely to compute the per-class values of precision, recall, and F1 score for each of the three classes. It is important to remember that in multi-class classification, we calculate the F1 score for each class in a One-vs-Rest (OvR) approach instead of a single overall F1 score as seen in binary classification. In this OvR approach, we determine the metrics for each.
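The One-vs-Rest computation described above can be sketched as follows. The table referred to in the text is not available here, so the 3x3 confusion matrix below is hypothetical (rows are true classes, columns are predicted classes), and the zero-division convention is an assumption.

```python
def per_class_metrics(cm: list[list[int]]) -> list[tuple[float, float, float]]:
    """Return (precision, recall, F1) for each class, treating it as 'positive' vs the rest."""
    n = len(cm)
    results = []
    for c in range(n):
        tp = cm[c][c]
        fn = sum(cm[c]) - tp                        # true class c, predicted as another class
        fp = sum(cm[r][c] for r in range(n)) - tp   # another class, predicted as c
        # Assumed convention: a metric with an empty denominator is reported as 0.0.
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0
        results.append((precision, recall, f1))
    return results


# Hypothetical confusion matrix for three classes (rows: true, columns: predicted).
confusion = [
    [4, 1, 1],
    [2, 3, 1],
    [0, 1, 5],
]

for label, (p, r, f1) in enumerate(per_class_metrics(confusion)):
    print(f"class {label}: precision={p:.3f} recall={r:.3f} f1={f1:.3f}")
```

The per-class F1 values returned here are the inputs that macro or weighted averaging would then combine into a single score.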